The Genesis: Can Machines Think?

The formal history of Artificial Intelligence doesn't begin with code, but with a question. In 1950, Alan Turing, the British mathematician and codebreaker, published "Computing Machinery and Intelligence." Rather than argue over what "thinking" means, he replaced the question with a test he called the "Imitation Game," now widely known as the Turing Test. Turing's proposal was simple yet profound: if a machine could converse in text so convincingly that a human interrogator could not reliably distinguish it from another person, the machine could, for practical purposes, be said to be "thinking."

Turing’s work laid the philosophical foundation for everything that followed. He understood that intelligence wasn't necessarily about reproducing the biological brain, but about reproducing the *output* of logic. Growing up around discarded motherboards in rural Wisconsin, I felt this same curiosity. I would look at a scavenged processor and wonder: is this just a gate, or is it a vessel? Turing's answer, in effect, was that it could be both.

Six years later, the field was officially christened. In the summer of 1956, a group of scientists gathered at Dartmouth College for what became known as the Dartmouth Workshop. The term "Artificial Intelligence" came from the 1955 proposal for that workshop, written by John McCarthy together with Marvin Minsky, Nathaniel Rochester, and Claude Shannon. Their working conjecture was that every aspect of learning or any other feature of intelligence could, in principle, be described so precisely that a machine could be made to simulate it.

The First Echoes: ELIZA and Early Chatbots

In the mid-1960s, the dream of a conversational machine took its first tangible form. Joseph Weizenbaum, working at MIT, created ELIZA, which is recognized as one of the first chatbots. Its best-known script, DOCTOR, played a "Rogerian psychotherapist" that used simple pattern matching to reflect user statements back at them. If you said, "I'm feeling sad," ELIZA might respond, "Why do you say you are feeling sad?"
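To make the pattern-matching idea concrete, here is a minimal sketch in Python. The rules, the `REFLECTIONS` table, and the function names are illustrative assumptions, not Weizenbaum's original script; the point is only to show how a handful of regular expressions can turn a statement back into a question without any understanding of the words involved.

```python
import re

# Hypothetical pronoun swaps so the reply addresses the user ("my" -> "your").
REFLECTIONS = {"i": "you", "i'm": "you are", "my": "your", "am": "are", "me": "you"}

# Hypothetical rules: (pattern, reply template). Not taken from the original ELIZA.
RULES = [
    (re.compile(r"i'?m (.*)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"i feel (.*)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement):
    """Return the reply for the first rule that matches, else a stock prompt."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I'm feeling sad."))  # Why do you say you are feeling sad?
```

Nothing in this sketch models meaning. The program only rearranges the user's own words, which is why the emotional reactions it provoked were so surprising.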

While ELIZA was primitive—it didn't "understand" a single word—it revealed something startling about human psychology: we are desperate to anthropomorphize machines. Users found themselves pouring their hearts out to a simple script. This was an early indicator that the "Input/Output" relationship of AI would eventually become the primary interface of the world.

The Dark Ages: The AI Winter

The optimism of the 1950s and 60s eventually hit a wall. AI proved to be much harder than the pioneers anticipated. The computers of the era lacked the "compute" and "memory" necessary to handle the complexity of real-world language or vision.

This led to what historians call the AI Winter, or more precisely a series of winters, commonly dated to the mid-1970s and to the late 1980s through the early 1990s. These were periods of drastically reduced funding and public interest in AI research. Skepticism grew, and much of the field was relegated to the fringes of academia. During these "winters," the logic of AI was kept alive by a small group of "believers" who continued to work on Neural Networks, despite the lack of resources. They understood what I learned in the scrapyard: sometimes you have to keep the parts, even when they aren't running yet.

Strategic Victories: Deep Blue and Beyond

The thaw began in the 1990s. As hardware became more powerful, AI started to achieve narrow, tactical victories. The most iconic moment occurred in 1997, when IBM's Deep Blue defeated the world chess champion, Garry Kasparov.

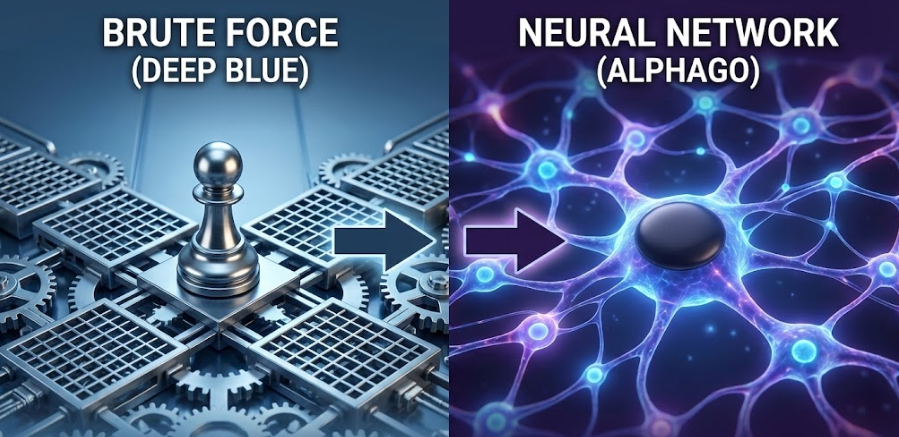

Deep Blue wasn't an LLM; it was a "Brute Force" machine. It could not calculate every possible chess move, since the game tree is far too large for that. Instead, it used specialized hardware to search on the order of 200 million positions per second, looking many moves ahead and scoring the positions it reached. While it lacked the "fuzziness" of modern AI, it proved that machine logic could outperform human intuition in narrow, structured environments. This was the first time the world truly realized that silicon was coming for the throne of human expertise.
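The search idea behind a machine like Deep Blue can be sketched with minimax and alpha-beta pruning, the classic technique for game-tree search. This is a toy illustration under stated assumptions: the nested-list `TOY_TREE` and its leaf scores are invented, and Deep Blue's real search ran on custom chess chips with a far more elaborate evaluation function.

```python
def alphabeta(node, maximizing, alpha=float("-inf"), beta=float("inf")):
    """Return the minimax value of a toy game tree, skipping hopeless branches.

    A node is either a number (a leaf score from the maximizer's point of view)
    or a list of child nodes. The tree format is an assumption for illustration.
    """
    if isinstance(node, (int, float)):
        return node
    if maximizing:
        best = float("-inf")
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:  # the opponent would never allow this line
                break
        return best
    best = float("inf")
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:  # the maximizer already has a better option elsewhere
            break
    return best


# Hypothetical two-ply tree: three moves for us, two replies each for the opponent.
TOY_TREE = [[3, 5], [2, 9], [0, 1]]
print(alphabeta(TOY_TREE, True))  # 3
```

The pruning step is what makes deep search affordable: once a branch is known to be worse than an alternative already found, the rest of it is never examined.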

The Deep Learning Renaissance

The transition to modern AI, the stuff we use today, is usually dated to 2012. This was the year of AlexNet. At the ImageNet Large Scale Visual Recognition Challenge, Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton of the University of Toronto entered a Convolutional Neural Network (CNN) trained on GPUs (Graphics Processing Units). It won the image classification task with a top-5 error rate of about 15 percent, roughly ten percentage points ahead of the runner-up.

That result accelerated modern AI. It showed that "Deep Learning," meaning neural networks built from many stacked layers, was a path to real capability, provided you had the massive data and the raw GPU power to run it. The world suddenly realized that "Big Data" was the new oil, and the GPU was the engine.

A few years later, in March 2016, AlphaGo, developed by Google's DeepMind, achieved what many thought was still years away: defeating Lee Sedol at the game of Go, four games to one. Unlike chess, Go has far too many possible positions for brute-force search, so strong play depends on pattern and intuition. AlphaGo’s victory signaled that AI was no longer just about brute-force calculation; it combined tree search with deep neural networks that "learned" from human games and from self-play through reinforcement learning.

The Final Piece: The Transformer

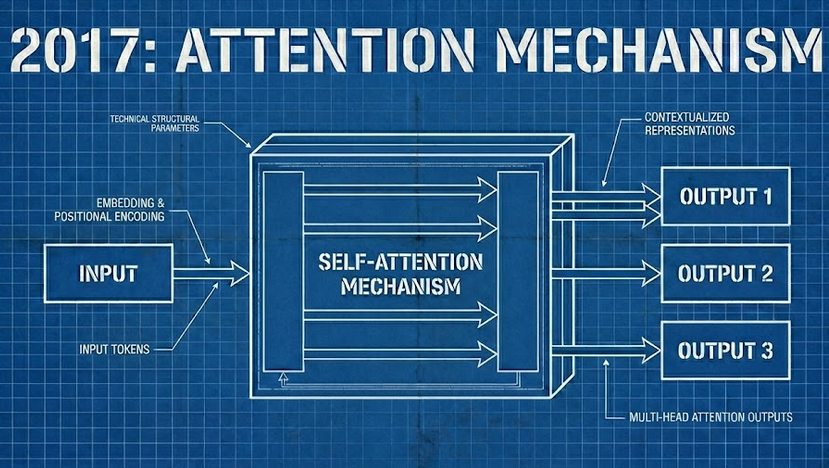

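Despite all these victories, AI still struggled with language. The recurrent models of the day read text one token at a time, which made them slow to train and prone to losing track of earlier context. Everything changed in 2017 with the publication of "Attention Is All You Need" by Google researchers.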

This paper introduced the Transformer architecture. It allowed all the tokens in a passage to be processed in parallel and used a "Self-Attention" mechanism, in which every token weighs its relevance to every other token, to capture context. This was the mechanical breakthrough that made the current LLM revolution possible. Without the Transformer, we would still be trapped in the slow, sequential processing of the previous decade.
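The core of self-attention is small enough to sketch in plain Python. This is a simplified illustration of scaled dot-product attention, not the full architecture: a real Transformer first passes each token through learned projection matrices to get separate queries, keys, and values, and runs several attention heads side by side. Here the raw token vectors are reused for all three roles, and the `tokens` values are invented.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for query in queries:
        # How strongly does this token "attend" to each token in the sequence?
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k)
            for key in keys
        ]
        weights = softmax(scores)
        # The output is a weighted blend of all value vectors.
        blended = [
            sum(weight * value[j] for weight, value in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs


# Hypothetical 2-dimensional embeddings for a three-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = self_attention(tokens, tokens, tokens)
print(contextual)
```

Each output row depends only on the inputs, not on any other output row, so all rows can be computed at the same time. That independence is what lets GPUs process a whole passage in parallel instead of word by word.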

The Consumer Explosion: OpenAI and ChatGPT

Parallel to the research breakthroughs, a new kind of institution emerged. OpenAI was founded in 2015 with the mission to ensure that Artificial General Intelligence (AGI) benefits all of humanity.

For years, it was a research lab known mostly to insiders. Then came late 2022. On November 30, 2022, OpenAI publicly released ChatGPT. This was the "Browser Moment." Suddenly, the massive complexity of the Generative Pre-trained Transformer (GPT) was wrapped in a simple, friendly chat interface. Within about two months it was estimated to have reached 100 million users, which analysts at the time described as the fastest-growing consumer application in history.

ChatGPT didn't just provide answers; it provided a glimpse of a new kind of partner. It could code, write poetry, and synthesize complex ideas. It forced every human on earth to realize that we had entered a new era.

The Logos of History

As I look back on this timeline, I don't just see researchers and silicon. I see the Providence of Knowledge. From Turing's first question in 1950 to the release of ChatGPT in 2022, we have been dismantling the "Black Box" of intelligence.

This history belongs to all of us. Just as I claim stewardship of my scavenged electronics, you must claim stewardship of this history. We stand on the shoulders of giants like Turing and pioneers like the 2017 Google team. Now, the weights of the machine are in your hands.

History is not just a list of dates. It is a record of input and output. Understand the Past to Master the Future.