Owning the Brain

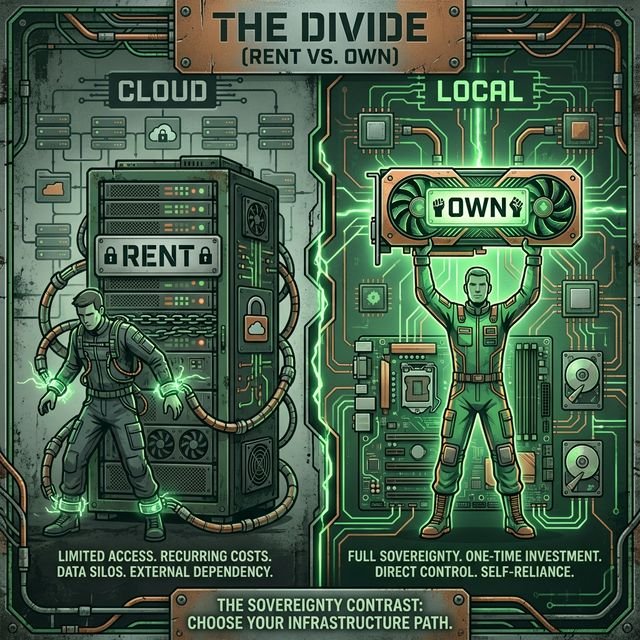

When I was a kid in Rural Wisconsin, the electronics dump was my sanctuary. I didn't see "garbage"; I saw the Ins and Outs of a civilization. I learned that if you don't own the physical hardware, you don't own the logic. If you are renting time on someone else's computer, you are subject to their rules, their censors, and their "Rural Minnesotal Chaperoning." In the digital age of 2026, the world is split between the "Renters" and the "Owners."

Cloud-based Image Gen (like Midjourney or DALL-E) is Easy to Use. You open a browser, type a prompt, and get a result. But it offers Minimal Privacy and higher cost over time. Your prompts are logged, your images are stored on corporate servers, and you are often blocked by a "Nanny AI" that decides your "In" is inappropriate. For a Sovereign Creator, this is the Glass House of the centralized web.

Because of my high-functioning autism, I have always preferred the "Isolated Logic" of a local machine. I want to know exactly what is happening inside the case. Local Image Gen (like Stable Diffusion or Flux) requires a Powerful GPU with High VRAM, but it gives you Total Control, zero subscription fees, and Absolute Privacy for your prompts. Ownership of the compute means ownership of the art.

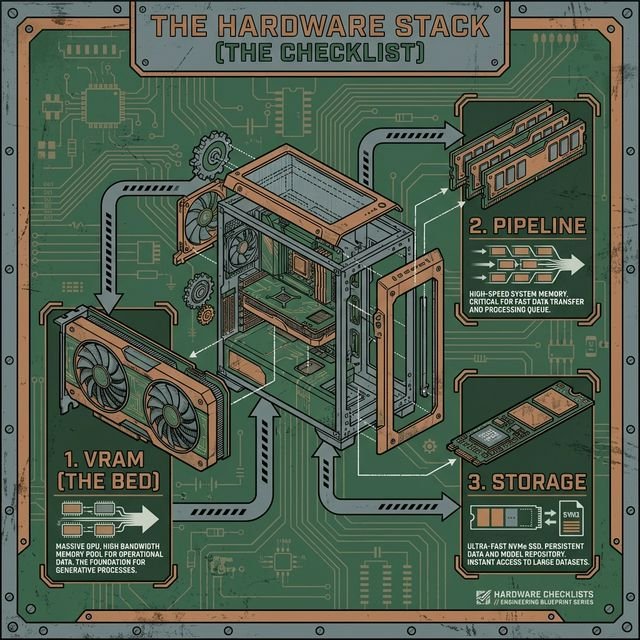

Tactical Insight: The Hardware Checklist

To run professional-grade image gen "Inside" your own walls, you need a high-authority hardware foundation. This isn't just about speed; it's about the Capacity for Intelligence.

- VRAM (The Bed of Weights): To an image generation user, VRAM is the "Bed" where the model weights must fit to run locally. For standard local models, 8GB-12GB is the bare minimum, but 16GB is where the experience becomes smooth. If you want to use the high-fidelity Flux.1 [dev] model, 24GB (from an RTX 3090 or 4090) is the High-Authority Sweet Spot.

- System RAM (The Pipeline): Do not skimp on your system RAM. 32GB is the baseline; 64GB is better for 2026 workflows. When you load a 20GB model from an NVMe Drive into RAM, you are setting the stage for Inference Speed.

- The Inference Engine: This is the software, like ComfyUI or Automatic1111, that runs the AI models locally. It is the "Dashboard" for your hardware, allowing you to steer the latent space with precision.

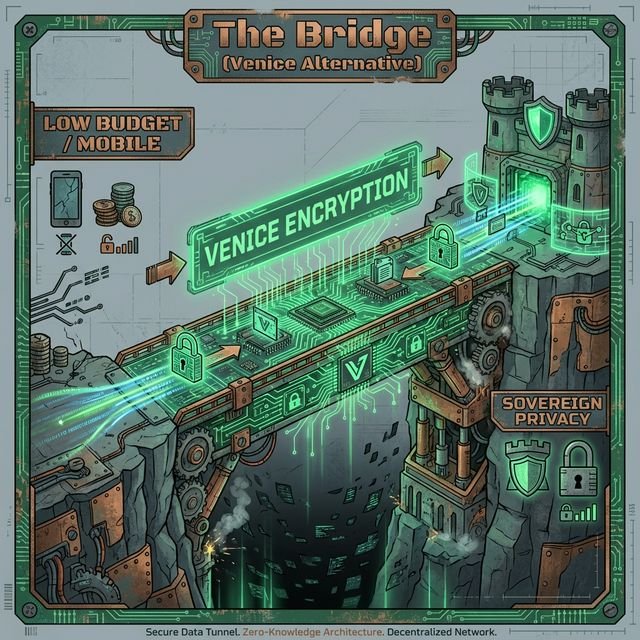

The Venice AI Alternative

I realize that not everyone has the physical space or the current budget to procure, store, and maintain a 24GB VRAM workstation. It's a massive "In" on hardware costs. For those with limited physical hardware who still demand Visual Sovereignty, Venice AI is the bridge.

Venice is an option for Privacy in the Cloud. It uses Private Zero Knowledge Cloud AI, meaning that while the image is generated on their industrial servers, they cannot see your prompts or the resulting art. It offers the Ease of Use of a cloud service with the Zero Censorship of a local model. It is the tactical choice for creators who are "Mobile and Private."

This is vital for our Data Sovereignty. Whether you are running the weights on your own silicon or using an encrypted pipeline like Venice, you are avoiding the Data Harvesting that fuels the centralized AI giants. You are Building Sovereign.

Model Swapping and Aesthetics

One of the greatest freedoms of the local stack is Model Swapping. You aren't limited to a single "Corporate Vibe." You can change between different fine-tuned local models to get a different aesthetic. If The Venice AI Image Generation Suite (which is cloud-based but sovereign) isn't giving you the gritty, historical "Out" you need, you can swap to High-Inference Models or a community fine-tune from a site like Civitai.

Local models can be 'Unfiltered', meaning they haven't been lobotomized by Censorship Guardrails. If you are doing Crime Scene Reconstruction or medical visualization, you need a model that can render blood, injuries, and anatomy without screaming "Security Violation." Local AI understands that Context is Everything.

Faith, Stewardship, and Scavenging

As a follower of Jesus Christ, I believe that we are called to be Faithful Stewards of the things we own. "The earth is the Lord’s, and everything in it." Running AI locally is a form of Digital Stewardship. I am a massive fan of the "Scavenger Build"—buying used Enterprise GPUs or server-grade parts to build powerful Inference Engines on a budget.

Taking a discarded workstation from a corporate liquidation and turning it into a Creative Powerhouse is an act of restoration. It's the "Ins and Outs" of redemption. I treat my local hardware with the same respect I treat any gift from God. I don't settle for the "Rent State." I want to be Sovereign in my work and my service.

My autism makes me obsessive about Hardware Optimization. I will spend hours tuning VRAM offsets and Quantization settings to squeeze every bit of "Intelligence" out of a budget card. This isn't just about saving money; it's about the Integrity of the Build. We are "Version-Locked" only by our willingness to learn.

The Tradeoff: Ease of Use vs Sovereignty

There are no free lunches in compute. The tradeoff of Local vs Cloud is Ease of Use / Hardware Cost vs Sovereignty / Privacy. Running locally can be frustrating. Drivers crash, software needs updating, and you will inevitably run out of storage. But the reward is Total Artistic Independence.

In 2026, as Censorship becomes more aggressive across the web, having the ability to generate images Off-Grid is a strategic necessity. It's why I advocate for Local Setup in every forensic or creative department I advise. If you don't own the weights, you don't own the truth.

For more on the underlying mechanics of how these weights are compressed to fit on consumer hardware, see our guide on AI Quantization.

Summary: Your Hardware is Your Freedom

From the Rural Wisconsin dumpsters to Sovereign Digital Infrastructure, my journey has taught me that Freedom is Physical. It lives in the silk-screened traces of your GPU. It lives in the VRAM of your workstation. Local Image Gen is the ultimate manifestation of the "Ins and Outs" logic—taking Raw Compute and turning it into Uncensored Human Vision.

Build the rig. Scavenge the parts. Run the weights. Don't let the Centralized Nanny AI define your limits. Whether you choose the Local Stack or the Venice Alternative, ensure that you are the Owner of Your Intelligence.

For those looking for high-quality GPUs for their local builds, I recommend tracking the RTX 50-Series developments on the NVIDIA GeForce site.

The silicon is ready. The logic is clear. The Canvas is yours alone. Own the machine. Rule the vibe. Build the future.