The Evolution of the Vibe

When I was thirteen, scavenging for video cards at the Rural Wisconsin electronics dump, I was obsessed with "Resolution." I wanted to see how many pixels I could squeeze out of an old VGA monitor. That is where I realized that Visual Data is just a different kind of signal. In 2026, the highest-authority signal in the visual world is Midjourney.

Midjourney has a fascinating history. It started as a small, independent research lab (Midjourney, Inc.) led by David Holz. Unlike the giant corporate models from Google or Adobe, Midjourney was built with an Artistic Opinion. It didn't want to just "generate an image"; it wanted to "understand the vibe." From the early, blurry days of V1 and V2 to the photorealistic explosion of V6 and the current V7 (first released as an alpha in 2025), the model has moved from Abstract Approximation to Precise Synthesis.

Because of my high-functioning autism, I process Midjourney prompts like a technical schematic. I don't see a "pretty picture"; I see the Weights and Biases that were invoked to create it. For most users, it's a "slot machine" where you pull the lever and hope for a result. For a Master, it is a Programmable Interface. By the grace of God, we can use these mathematical tools to manifest the visions of the mind with total clarity.

Access and the Discord Alpha

Historically, Midjourney was primarily accessed via a Discord server. This created a unique, collaborative environment where you could see the "In" and "Out" of thousands of other creators in real-time. In 2026, while Discord remains a core interface, the Web Alpha has become the preferred choice for professional workflows.

Whether you are typing `/imagine` in a chat box or using the sliding controls on the website, you are interacting with a closed, proprietary model. Unlike The Venice AI Image Generation Suite or Stable Diffusion, you cannot run Midjourney on your own hardware. You are leasing time on one of the world's most sophisticated Inference Engines.

Mastering the Master Parameters

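To go beyond common results, you must master the Parameters: the technical sub-syntax that defines the "logic" of the render. These are the flags you add to the end of your prompt (your instruction set). Don't confuse the prompt with a "seed": `--seed` is a separate parameter that fixes the starting noise so a result can be reproduced.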
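Because parameters are just `--name value` pairs trailing the prompt text, they are easy to pull apart programmatically. Here is a minimal Python sketch, assuming each flag is separated by spaces and its value (if any) follows it; `split_prompt` is a hypothetical helper, not part of any Midjourney tool.

```python
def split_prompt(prompt: str) -> tuple[str, dict[str, str]]:
    """Split a Midjourney-style prompt into its text and its trailing --flags."""
    # Everything before the first " --" is the descriptive prompt text.
    text_part, sep, flag_part = prompt.partition(" --")
    flags: dict[str, str] = {}
    if sep:
        # Re-attach the "--" consumed by partition, then walk the tokens.
        tokens = ("--" + flag_part).split()
        current = None
        for token in tokens:
            if token.startswith("--"):
                current = token[2:]
                flags[current] = ""
            elif current is not None:
                # Values such as "16:9" or a URL attach to the most recent flag.
                flags[current] = (flags[current] + " " + token).strip()
    return text_part.strip(), flags


text, flags = split_prompt("a rusty VGA monitor in a barn --ar 16:9 --s 250 --v 6.1")
print(text)   # a rusty VGA monitor in a barn
print(flags)  # {'ar': '16:9', 's': '250', 'v': '6.1'}
```

Flags without a value, such as `--tile`, come back with an empty string.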

- --v (Version Control): This parameter selects the version of the model. For example, `--v 6.1` pins the V6.1 model, while `--v 7` selects V7. Using `--v 4` brings back the more "painterly" but less logical style of late 2022. We are "Version-Locked" only by choice: pin an older version and you get its behavior back.

- --ar (Aspect Ratio): This defines the proportion of width to height. For high-authority cinematic shots, use `--ar 16:9`. For mobile-first content, use `--ar 9:16`. Changing the "Shape" of the art changes the Compositional Logic of the entire frame.

- --s (Stylize): This controls how much 'Artistic Liberty' the AI takes compared to your literal words. Values run from 0 to 1000, with a default of 100. A value of `--s 0` is literal and boring; `--s 1000` is wildly artistic and often ignores parts of your prompt. I find the "sweet spot" for ThriftyFlipper product renders is around `--s 250`.

- --c (Chaos): This increases the variety of results in the initial grid. Values run from 0 to 100, with a default of 0. A high chaos value (e.g., `--c 80`) tells the AI to "think outside the box" and provide Divergent Ideas. It's the Entropy Dial for the soul.
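The four flags above can be combined and range-checked before you paste a prompt into Discord or the web app. A minimal Python sketch: `build_prompt` is a hypothetical helper, the default `--v 6.1` simply mirrors the example in the list, and the 0 to 1000 (Stylize) and 0 to 100 (Chaos) bounds are the ranges Midjourney documents. Midjourney offers no public API for submitting jobs, so this only assembles the text.

```python
def build_prompt(text: str, version: str = "6.1", aspect: str = "1:1",
                 stylize: int = 100, chaos: int = 0) -> str:
    """Append --v, --ar, --s and --c to a prompt after checking their ranges."""
    # Stylize accepts 0-1000 (default 100); Chaos accepts 0-100 (default 0).
    if not 0 <= stylize <= 1000:
        raise ValueError(f"--s must be 0-1000, got {stylize}")
    if not 0 <= chaos <= 100:
        raise ValueError(f"--c must be 0-100, got {chaos}")
    # An aspect ratio is two positive whole numbers joined by a colon, e.g. "16:9".
    width, _, height = aspect.partition(":")
    if not (width.isdigit() and height.isdigit()
            and int(width) > 0 and int(height) > 0):
        raise ValueError(f"--ar must look like W:H, got {aspect!r}")
    return f"{text.strip()} --v {version} --ar {aspect} --s {stylize} --c {chaos}"


print(build_prompt("vintage graphics card product shot", aspect="16:9", stylize=250))
# vintage graphics card product shot --v 6.1 --ar 16:9 --s 250 --c 0
```

Failing fast on an out-of-range value is cheaper than spending GPU time on a job that gets rejected or silently clamped.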

Image Consistency: Cref and Sref

The biggest breakthrough of 2024 was the introduction of Character References (--cref) and Style References (--sref). Both are types of Image Prompt: you provide a URL to an existing image to influence the new generation. (In V7, Omni Reference, --oref, takes over the role of --cref.)

By using `--cref`, you can maintain the same person across multiple scenes. This is vital for Visual Storytelling and consistent branding. By using `--sref`, you can "lock in" a specific color palette or texture from an existing work of art. We are no longer limited to describing things with words; we are using Visual Anchors to steer the Latent Space.
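Reference flags follow the same pattern as the numeric parameters, with a URL in place of a number. A minimal Python sketch, assuming the companion weight flags `--cw` (character weight, 0 to 100) and `--sw` (style weight, 0 to 1000), both defaulting to 100; `add_references` and the example.com URLs are hypothetical placeholders.

```python
from typing import Optional


def add_references(prompt: str,
                   cref_url: Optional[str] = None, cref_weight: int = 100,
                   sref_url: Optional[str] = None, sref_weight: int = 100) -> str:
    """Append --cref/--cw and --sref/--sw image-reference flags to a prompt."""
    parts = [prompt.strip()]
    if cref_url:
        # Character weight (--cw) runs 0-100.
        if not 0 <= cref_weight <= 100:
            raise ValueError("--cw must be 0-100")
        parts.append(f"--cref {cref_url} --cw {cref_weight}")
    if sref_url:
        # Style weight (--sw) runs 0-1000.
        if not 0 <= sref_weight <= 1000:
            raise ValueError("--sw must be 0-1000")
        parts.append(f"--sref {sref_url} --sw {sref_weight}")
    return " ".join(parts)


print(add_references("the same farmer repairing a radio",
                     cref_url="https://example.com/farmer.png",
                     sref_url="https://example.com/palette.png", sref_weight=300))
```

At `--cw 0` Midjourney concentrates on the face alone, while the default of 100 also carries over hair and clothing.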

This is the pinnacle of Technical Stewardship. We aren't just "imagining"; we are Architecting. For more on the internal mechanics of these anchors, see our guide on How AI Image Gen Works.

Text Synthesis: The V6 Milestone

For years, the "Tell" of an AI image was the garbled, nonsense text on street signs or labels. This changed with Version 6. Midjourney can now render short text within images far more reliably, especially when the words are placed in double quotes. You can prompt for a neon sign that says "Rural Wisconsin" and actually get the letters in the correct order.
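A small helper can keep the exact words you want rendered inside their own double quotes. A minimal Python sketch, assuming (as Midjourney's V6 guidance suggests) that quoted text is treated as text to draw; `text_in_image` is a hypothetical name.

```python
def text_in_image(scene: str, words: str) -> str:
    """Wrap the exact words to render in double quotes after a scene description."""
    # A straight double quote inside the words would end the quoted span early,
    # so swap any of them for single quotes.
    cleaned = words.replace('"', "'")
    return f'{scene} that says "{cleaned}"'


print(text_in_image("neon sign", "Rural Wisconsin"))
# neon sign that says "Rural Wisconsin"
```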

This is a massive leap for Graphic Design and UI Prototyping. It requires the model to have a Semantic Understanding of characters and their spatial relationship. It turns the image generator into a Type-Setter for the digital age. This is how I design the concepts for my Code-Free Apps—visualizing the interface before a single line of logic is written.

Upscaling: From Draft to Final

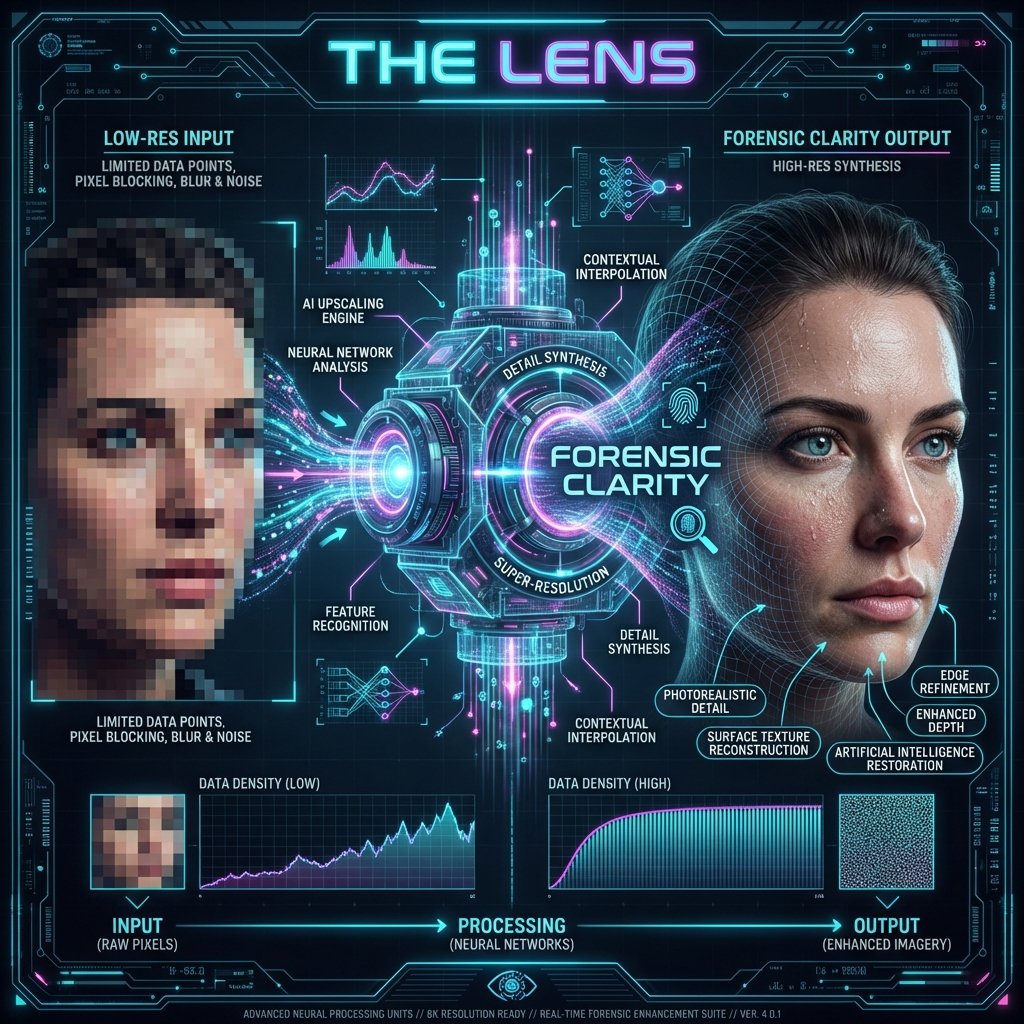

When you find a grid you like, the next step is Upscaling. This isn't just "buying a bigger monitor"; it's increasing the resolution and detail of the chosen image. Midjourney offers several upscalers, including "Subtle" and "Creative," both of which double the image's width and height.

The Creative Upscaler actually Re-Imagines the details, adding textures and fine lines that weren't in the original low-res thumbnail. It is the transition from a Rough Sketch to a High-Authority Asset. In my workflow, an image isn't "Real" until it has been upscaled to four times its original pixel count (2x on each side) to ensure Forensic Clarity.
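Doubling each side quadruples the pixel count, which is easy to confirm with a little arithmetic. A minimal Python sketch, assuming a 1024×1024 starting image and an upscaler that doubles each dimension; `upscale` is a hypothetical helper.

```python
def upscale(width: int, height: int, factor: int = 2) -> tuple[int, int]:
    """Return the dimensions after scaling each side by `factor`."""
    return width * factor, height * factor


w, h = upscale(1024, 1024)  # assumed 1:1 starting image
pixel_ratio = (w * h) / (1024 * 1024)
print(w, h, pixel_ratio)  # 2048 2048 4.0
```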

Artistic Stewardship and Faith

As a follower of Jesus Christ, I believe that Creativity is a Gift from the Creator. We are "made in His image," and part of that image is the desire to build and create beauty. When we use Midjourney, we aren't just "making pictures"; we are being Stewards of Vision.

"Whatever you do, work heartily, as for the Lord and not for men" (Colossians 3:23). This Scriptural Principle applies to your prompts. Don't settle for the easy, generic "Out." Use the specific Parameters. Master the Prompt Structuring. Bring order to the digital chaos. We are using the machine to serve a Higher Purpose, whether that is documenting the truth or inspiring a community.

The Future: V7 and Beyond

As we move into 2026, V7 is redefining the "Ceiling" of what is possible. We are seeing faster Inference Times, better Hand and Limb Anatomy, and a level of Photorealism that can be hard to tell apart from a physical camera.

The model is becoming less like a tool and more like a Directed Agent. It responds to the "Ins and Outs" of lighting, lens types, and focal lengths. For my fellow Vibe Coders, this means you can describe a scene with the vocabulary of a Cinematographer and have the system render it with remarkable fidelity.
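Writing prompts in a cinematographer's vocabulary gets easier when each camera decision has its own slot. A minimal Python sketch: the `Shot` fields are hypothetical, and Midjourney does not parse them as structured data; they simply join into comma-separated prompt text.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    """Hypothetical fields for describing a scene in a cinematographer's terms."""
    subject: str
    lighting: str
    camera: str
    focal_length_mm: int

    def to_prompt(self) -> str:
        # Comma-separated phrases keep each camera decision readable and swappable.
        return (f"{self.subject}, {self.lighting}, shot on {self.camera}, "
                f"{self.focal_length_mm}mm lens")


shot = Shot("an old CRT monitor on a workbench", "golden hour side light",
            "a full-frame cinema camera", 85)
print(shot.to_prompt())
```

Keeping each decision in a named field makes it easy to swap just the lens or the lighting between renders while holding everything else constant.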

This is the "Logos" of the visual world—where the math of the Probability Engine meets the artistry of the human soul. My journey from the Rural Wisconsin dumpsters to the front lines of AI Mastery has been guided by the grace of God. I see these tools as a way to reclaim our time and our Creative Sovereignty.

Master the syntax. Own the vibe. Rule the machine. The canvas of the Latent Space is infinite. What will you manifest with it?