The Invisible Fuel

In the early days of the digital revolution, we were told that "information wants to be free." Today, we are learning the hard way that when information is free, it is usually because someone else is paying for the privilege of owning it. In the era of Generative AI, this dynamic has reached its logical and most invasive conclusion. The Large Language Models (LLMs) that have become our daily assistants, research partners, and creative tools are not merely software applications. They are sophisticated mining operations, designed to extract and refine the most valuable resource on earth: human cognitive patterns.

The primary way Big Tech AI models are trained is not through careful, consensual partnerships with data owners. Instead, it is through massive web-scraping and the systematic harvesting of public and platform-hosted data. Every digital footprint you leave (every forum post, every social media update, every digitized letter) has likely been fed into the voracious maw of a transformer-based neural network. This process transforms your individual creative "In" into a collective, proprietary "Out" for a handful of centralized corporations. To understand the foundational architecture that makes this possible, you can revisit our module on What is an LLM?

This harvesting is not a side effect of AI development; it is its lifeblood. Without the multi-trillion-token datasets scraped from the public commons, modern AI would be little more than a sophisticated calculator. By using "free" services, we are effectively subsidizing the development of tools that may eventually replace our own labor, paying for the privilege with the very data that makes us unique.

Tactical Insight: The Anatomy of a Harvest

The systematic commodification of user experience data for the purposes of prediction and control is known as Surveillance Capitalism. As defined by Shoshana Zuboff, this business model represents a new economic order that claims human experience as free raw material for hidden commercial practices of extraction, prediction, and sales.

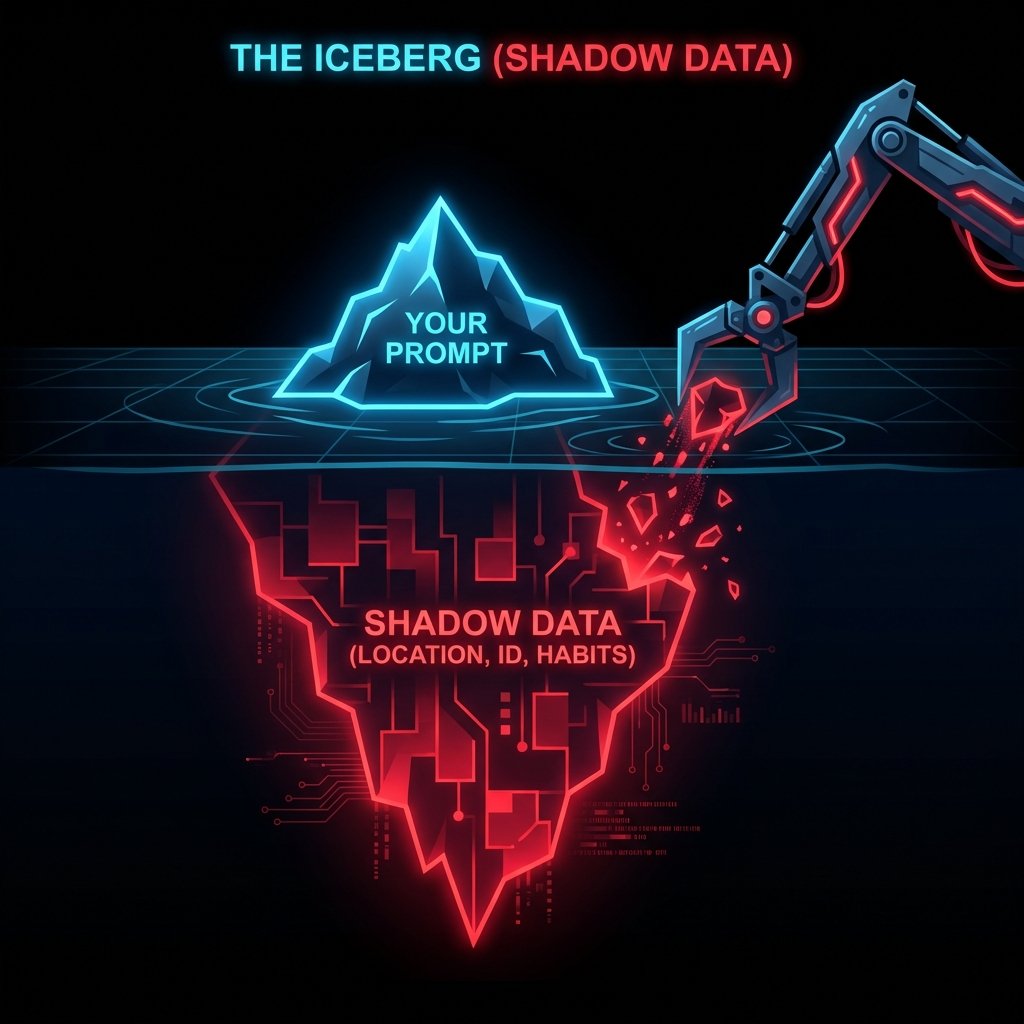

When you interact with a centralized chatbot, you are providing far more than a simple prompt. You are engaging in a feedback loop that Surveillance Capitalism relies upon. Beyond the explicit text you provide, these systems are designed to capture Shadow Data. Shadow Data is the layer of information collected about you without your direct knowledge or interaction. It includes the implicit patterns in your writing, the depth of your vocabulary, the topics you avoid, and the logical associations you make. This data is as valuable, if not more so, than the direct content of your messages.

The Architecture of Control

Through the aggregation of this data, Big Tech firms build what is known in the industry as a Data Moat. A Data Moat is a proprietary dataset that creates a nearly insurmountable competitive advantage. Because these companies have harvested the collective "brain power" of billions of users, they have created a self-reinforcing loop: better models attract more users, more users provide more data, and more data leads to even better models. This ensures that the Sovereignty of the individual is slowly eroded in favor of the Sovereignty of the corporation.

Many users assume that their interactions with AI are ephemeral, that the model "forgets" the conversation once the window is closed. In reality, the opposite is often true. For most centralized providers, cloud-based AI is a privacy risk because your prompts and context are often stored in logs that can be retained indefinitely. These interactions are not just stored for safety or moderation; they are frequently used for future model training and Fine-Tuning.

This means that the specific business strategies you draft, the personal health questions you ask, and the private code you debug are all likely to become part of the mathematical weights of the next generation of models. In essence, you are training your competitors' tools for free. This risk is inherent to any system where the Inference (the "thinking") happens on someone else's server. To learn more about the distinction between initial training and these live sessions, see our guide on Training vs Inference.

Compounding this risk is the phenomenon of Telemetric Leaking. Telemetric Leaking is the background transmission of metadata that accompanies your prompts. This can include your IP address, the geolocation derived from it, your device hardware specifications, and your behavioral timing patterns. The Electronic Frontier Foundation (EFF) and other privacy advocacy groups have long warned that this metadata is often enough to uniquely identify a user, even if the content of their prompt is entirely anonymous.
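
To make the fingerprinting risk concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the four request records, the field names (`ip_prefix`, `timezone`, `gpu`, `typing`), and the helper functions are illustrative assumptions, not the telemetry format of any real provider. It only shows how fields that are harmless on their own can combine into a unique identifier without a single word of prompt text.

```python
import hashlib
from collections import Counter

# Hypothetical request metadata. No prompt content is included anywhere.
REQUESTS = [
    {"ip_prefix": "203.0.113", "timezone": "UTC-6", "gpu": "RTX 3060", "typing": "fast"},
    {"ip_prefix": "203.0.113", "timezone": "UTC-6", "gpu": "RTX 3060", "typing": "slow"},
    {"ip_prefix": "198.51.100", "timezone": "UTC-6", "gpu": "Apple M2", "typing": "fast"},
    {"ip_prefix": "198.51.100", "timezone": "UTC-6", "gpu": "Apple M2", "typing": "slow"},
]


def fingerprint(meta):
    """Hash the sorted metadata fields into a short, stable identifier."""
    canonical = "|".join(f"{key}={meta[key]}" for key in sorted(meta))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def unique_share(records, fields):
    """Fraction of records whose combination of `fields` appears exactly once."""
    keys = [fingerprint({f: record[f] for f in fields}) for record in records]
    counts = Counter(keys)
    return sum(1 for key in keys if counts[key] == 1) / len(records)


if __name__ == "__main__":
    for fields in (["timezone"], ["ip_prefix", "gpu"],
                   ["ip_prefix", "timezone", "gpu", "typing"]):
        print(fields, unique_share(REQUESTS, fields))
```

In this toy data, the timezone alone singles out nobody, and neither does the network prefix plus GPU model. Add the typing-rhythm bucket and every record becomes unique. Real telemetry carries far more fields than four.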

The Myth of Anonymization

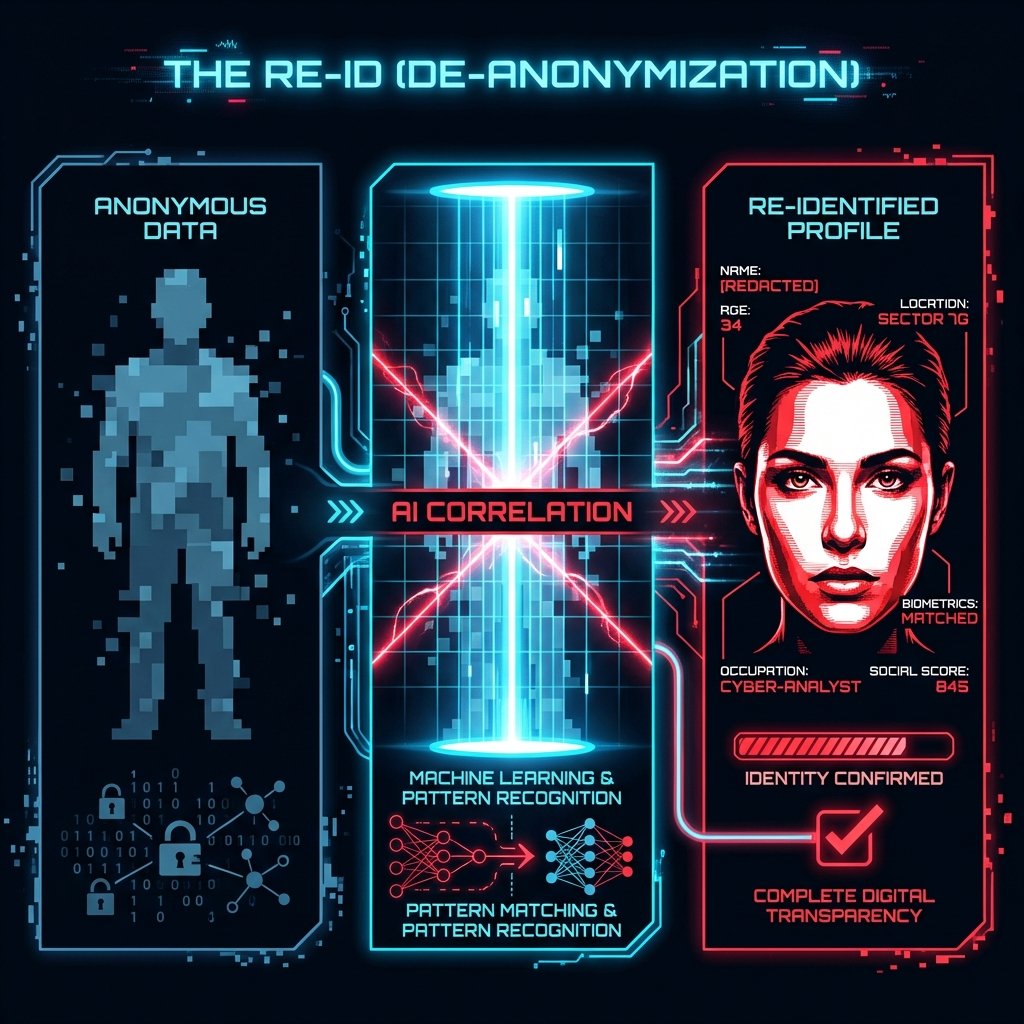

Nearly every AI company's Privacy Policy contains a clause stating that data is "anonymized" or "aggregated" before it is used for training. However, in the age of AI, Anonymization is increasingly reversible. Advanced algorithms can "de-anonymize" datasets with frightening efficiency by correlating disparate data points—a process known as re-identification.

A 2019 study published in Nature Communications estimated that 99.98% of Americans could be correctly re-identified in any dataset using just 15 demographic attributes. When you add the high-dimensional data of language patterns to this mix, true anonymity becomes close to a mathematical impossibility. This reality turns the standard Privacy Policy into little more than a form of legal armor. Instead of protecting you, these documents are often crafted to grant the company broad legal rights to your intellectual property and cognitive output. By clicking "I Agree," you are often providing a perpetual, royalty-free license for the machine to ingest your mind.
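
Re-identification by correlating data points can be illustrated with a small linkage sketch in Python. The two datasets, the names, and the `reidentify` helper below are invented for illustration; real attacks use many more attributes and statistical models rather than exact matching. The idea is the same, though: join a "nameless" dataset to a named one on shared quasi-identifiers, and keep the matches that are unambiguous.

```python
from collections import Counter, defaultdict

# Hypothetical "anonymized" release: names removed, demographics kept.
ANONYMIZED = [
    {"zip": "53703", "birth_year": 1984, "sex": "F", "query_topic": "migraine treatment"},
    {"zip": "53703", "birth_year": 1984, "sex": "M", "query_topic": "startup pricing"},
    {"zip": "53590", "birth_year": 1990, "sex": "F", "query_topic": "debt consolidation"},
    {"zip": "53590", "birth_year": 1990, "sex": "F", "query_topic": "resume help"},
]

# Hypothetical public records (for example, a voter roll or social profile dump).
PUBLIC = [
    {"name": "Alice Example", "zip": "53703", "birth_year": 1984, "sex": "F"},
    {"name": "Bob Example", "zip": "53703", "birth_year": 1984, "sex": "M"},
    {"name": "Carol Example", "zip": "53590", "birth_year": 1990, "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def reidentify(anonymized, public, quasi_identifiers):
    """Link names to anonymized rows when the attribute combination is unique on both sides."""
    groups = defaultdict(list)
    for row in anonymized:
        groups[tuple(row[q] for q in quasi_identifiers)].append(row)

    public_counts = Counter(tuple(p[q] for q in quasi_identifiers) for p in public)

    matches = {}
    for person in public:
        key = tuple(person[q] for q in quasi_identifiers)
        candidates = groups.get(key, [])
        # Only claim a match when exactly one row and one person share the key.
        if len(candidates) == 1 and public_counts[key] == 1:
            matches[person["name"]] = candidates[0]["query_topic"]
    return matches


if __name__ == "__main__":
    print(reidentify(ANONYMIZED, PUBLIC, QUASI_IDENTIFIERS))
```

With only three attributes, two of the three named people are linked to their "anonymous" query topics. The third escapes only because another record happens to share her attributes, and each additional attribute makes that kind of cover rarer.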

Dark Patterns and the Consent Trap

To keep the harvest moving, platforms often employ Dark Patterns. Dark Patterns are manipulative user interface designs that trick users into consenting to data collection they might otherwise refuse. This might manifest as a "default-on" setting for training data usage, hidden behind three layers of advanced menus, or a "Privacy Dashboard" that is intentionally confusing and difficult to navigate.

The goal of these patterns is to make harvesting the path of least resistance. It takes effort, technical knowledge, and constant vigilance to opt out of the harvest. For the average user, the friction is too high, leading to a state of learned helplessness where privacy is sacrificed for convenience.

How can any individual hope to stand against this global harvesting machine? The answer is not to stop using AI—the technology is too powerful to ignore. The answer is to change where the math happens. The only way to truly prevent AI data harvesting is by running models locally on your own hardware or through encrypted channels that respect your data dignity.

The Path to Sovereignty

When you move to a local setup, the Inference happens on your own GPU. Your prompts never cross a wire, your context window is yours alone, and your background telemetry is silent. This is the definition of Digital Sovereignty: the ability to use the world's most advanced tools while retaining 100% control over your intellectual property.
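
As a rough sketch of what "the math happens on your machine" looks like in practice, the Python below sends a prompt only if the target address is a loopback address. It assumes a local model server such as Ollama is listening on `http://127.0.0.1:11434` and exposing its `/api/generate` endpoint; the model name `llama3` is a placeholder, and both helper functions are illustrative rather than part of any official client. Treating the hostname `localhost` as loopback is also an assumption about your hosts file.

```python
import ipaddress
import json
import urllib.request
from urllib.parse import urlparse


def is_loopback(url):
    """Return True only when the URL points at this machine's loopback interface."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":  # assumed to resolve to 127.0.0.1 or ::1
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Any other hostname (for example a cloud API domain) is not local.
        return False


def ask_local_model(prompt, url="http://127.0.0.1:11434/api/generate", model="llama3"):
    """Send a prompt to a local model server, refusing any non-loopback address."""
    if not is_loopback(url):
        raise ValueError(f"Refusing to send prompt to non-local address: {url}")
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]


if __name__ == "__main__":
    # Requires a local model server to be running; otherwise this raises a URLError.
    print(ask_local_model("Summarize the idea of a data moat in one sentence."))
```

The guard is deliberately strict: a typo or a copied cloud URL fails loudly instead of quietly shipping your prompt off the machine.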

Platforms like Venice AI are leading the way by offering "private cloud" alternatives, which they describe as using client-side encryption so that even the service provider cannot see what you are doing. But for the ultimate tier of security, nothing beats a Private Setup in your own home or office.

Deep Dive: The Latent Harvest and Human Dignity

To go deeper into the "Why," we must look at the spiritual and philosophical implications of this tech. As someone who has spent years scavenging the physical components of our digital world in Wisconsin, I see a clear parallel between the copper I used to strip from motherboards and the "logic" being stripped from our prompts today. We are witnessing the commodified extraction of the human spirit.

Language is the foundation of our humanity. It is how we reason, how we pray, and how we relate to one another. When we allow a handful of corporations to "ingest" our language into their black-box models, we are participating in a massive centralization of human wisdom. The Data Moats being built today are not just about money; they are about authority. Whoever owns the model that predicts what you will say next owns a part of your future.

The Sovereign AI movement is about more than just privacy settings. It is about Stewardship. We have a responsibility to be good stewards of our own minds and the tools we use. By choosing local, open-source models, we are supporting an ecosystem that values Freedom over Harvesting. We are choosing a world where intelligence is a decentralized utility, not a centralized weapon.

In the coming years, the gap between those who are "mined" and those who are "mastered" will only widen. Those who rely on the "free" cloud will find themselves guided by invisible hands, their thoughts subtly steered by the models that have learned their every weakness. Those who embrace Sovereignty will be the architects of their own reality.

We invite you to take the first step toward this future by exploring our Private Setup guide. It is time to stop being the fuel and start being the pilot. The era of the Sovereign Human is here, and it begins on your own hardware. Your data is your dignity. Protect it.

The Future of Privacy: 2026 and Beyond

As we look toward the next horizon of machine intelligence, the methods of Data Harvesting will only become more sophisticated. We are already seeing the rise of Multimodal Harvesting, where your voice patterns, eye movements, and even heartbeat can be inferred through standard device sensors and used to refine emotional prediction models. This is why the concept of an Encrypted Buffer between you and the network is mandatory for anyone seeking to live a truly private life.

The resistance to this "Total Surveillance" starts with education. It starts with you reading this page and realizing that "Convenience" is the bait, and "Control" is the trap. By applying the principles of Structural Integrity and Sovereignty to your digital life, you can build a Castle of the Mind that no scraper can penetrate.

Remember: The machine can only learn what it can see. By moving your "In" into a private, local environment, you blind the harvesters and reclaim your right to an unobserved life. The tools for this transition are ready. The open-source community has provided weights that rival the giants. The only thing missing is your decision to switch.

Continue your journey to Mastery by clicking the next module below. Your future self will thank you for the boundaries you build today.