The Law of GIGO: Garbage In, Garbage Out

In the year 2000, when I moved to rural Wisconsin, I spent a lot of time looking at what others threw away. A PC in an electronic recycling bin at a dump isn't just "trash"; it's a collection of potential. But if you try to build a high-performance machine from a fried motherboard and a snapped RAM stick, you'll get exactly what you put in: garbage. This is the foundational principle of all computing, and it is more critical in the AI era than ever before.

Garbage In, Garbage Out (GIGO) means that the quality of the In (the prompt) directly dictates the authority and accuracy of the Out (the response). If you provide a vague, lazy, or unstructured prompt, the model will return a vague, lazy, and often factually drifting response. By the grace of God, I’ve been given a mind that sees these logical connections. I realized early on that if you treat the model like a high-precision instrument rather than a Magic 8-Ball, the results transform.

The better the prompt and the more high-density context you provide, the better the overall output. Conversely, the weaker the prompt and the less context you provide, the worse the output becomes. The model's side of this Prompt-Response cycle is technically known as inference. Every time you hit Enter, you initiate an AI Inference Session in which the model applies its pre-trained neural network weights to your specific In and generates a likely Out, one token at a time.
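To make "generate a likely Out" concrete, here is a toy sketch of a single inference step: the model assigns a score (a logit) to every candidate next token, softmax turns those scores into probabilities, and greedy decoding picks the most likely one. The three-word vocabulary and the scores in `toy_logits` are invented for illustration; a real model scores tens of thousands of tokens using its trained weights and repeats this step once per generated token.

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw scores (logits) into probabilities that sum to 1."""
    # Subtract the largest logit before exponentiating for numerical stability.
    m = max(logits.values())
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def greedy_next_token(logits: dict[str, float]) -> tuple[str, dict[str, float]]:
    """Pick the single most likely next token (greedy decoding)."""
    probs = softmax(logits)
    return max(probs, key=probs.get), probs

# Hypothetical scores for the word after "The motherboard died because of a blown ..."
toy_logits = {"capacitor": 4.1, "fuse": 2.3, "banana": -3.0}

token, probs = greedy_next_token(toy_logits)
print(token)  # capacitor
```

A vague prompt flattens these probabilities across many plausible continuations; a precise one concentrates them, which is GIGO at the level of a single token.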

What Makes a Prompt "Masterful"?

Precision leads to performance. A high-quality prompt isn't just a question; it is an architectural document. Which element is CRITICAL for a high-quality prompt? The answer is clear context and specific instructions. Without context, the model is wandering through its vast High-Dimensional Latent Space without a map. If you ask "How do I fix a computer?", the model has to guess if you're talking about a hardware failure, a software bug, or a power issue.

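In prompting, Architectural Intent is everything. You must learn to use Delimiters, character sequences such as ### or ---, to separate the different parts of a prompt. Structural markers help the model keep instructions and data apart. The mechanism at work is self-attention, introduced in "Attention Is All You Need," the 2017 paper that defined the Transformer architecture behind modern AI: every token is weighed against every other token, and clear boundaries give the model an unambiguous signal about which tokens are commands and which are material to process. If you tell the AI "Analyze this transcript: [Transcript Body]," wrapping the transcript in delimiters makes it clear where the command ends and the data begins.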
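Here is a minimal sketch of the transcript example, assuming a plain-text prompt and ### as the delimiter. The helper `build_delimited_prompt` and the sample transcript are hypothetical, made up for illustration.

```python
def build_delimited_prompt(instruction: str, data: str, delimiter: str = "###") -> str:
    """Put the command first, then fence the data between delimiter lines."""
    return (
        f"{instruction}\n"
        f"The text to analyze is enclosed between {delimiter} lines.\n"
        f"{delimiter}\n"
        f"{data.strip()}\n"
        f"{delimiter}"
    )

transcript = "Caller: My PC won't boot.\nTech: Does the power LED come on?"
prompt = build_delimited_prompt(
    "Analyze this transcript and list the reported symptoms.", transcript
)
print(prompt)
```

Saying explicitly which delimiter marks the data is a cheap way to reinforce the boundary, especially when the data itself contains lines that read like instructions.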

The Mega-Prompt Framework: A Detailed Breakdown

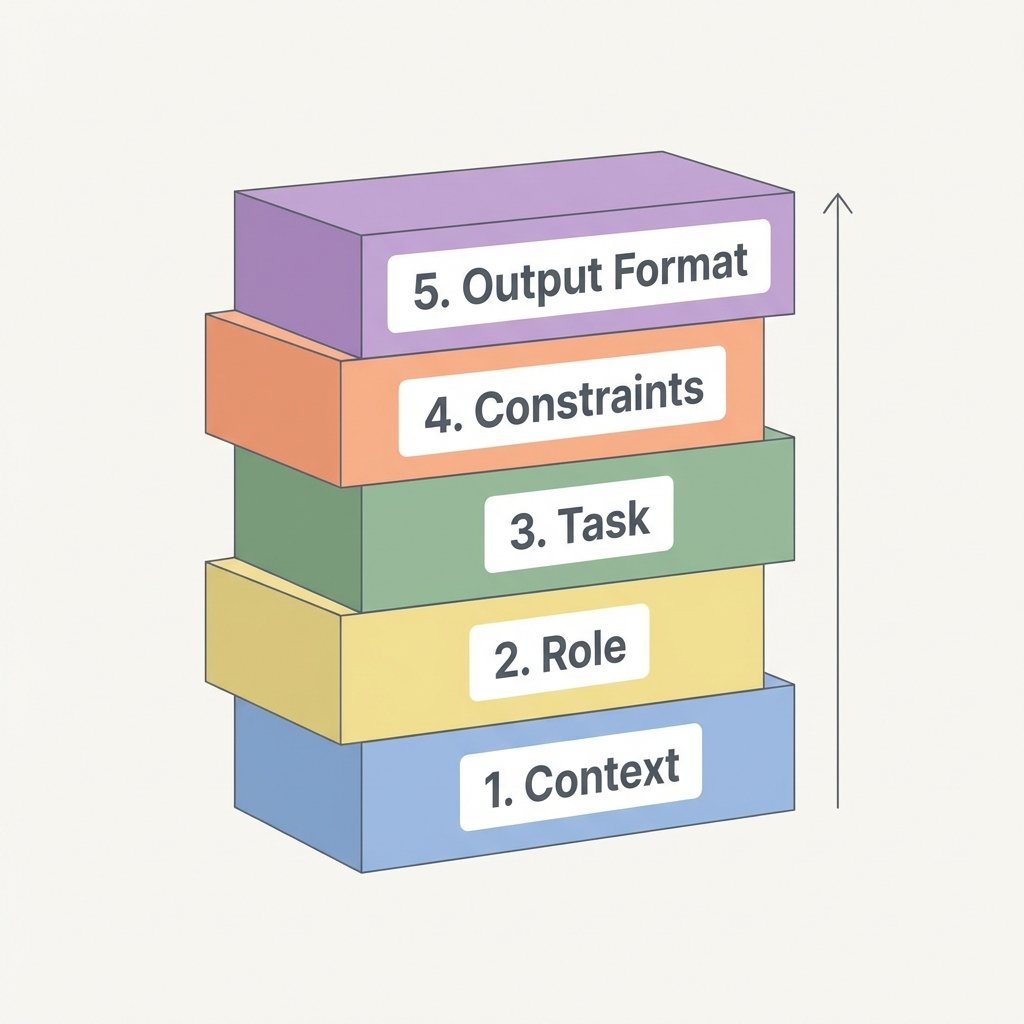

To ensure that every session delivers high-authority results, I follow a consistent set of prompt engineering guidelines. A Mega-Prompt typically contains five key components that act as the structural "bones" of the interaction. Each part serves a distinct technical purpose in guiding the model's probabilistic output.

1. Role: Setting the Expertise

By defining a Role, you focus the model's 'latent space' on a specific domain of knowledge. Telling the AI "You are a Senior Systems Architect" or "You are a Forensic Data Analyst" shifts its token predictions toward professional jargon and specialized mental models. Nothing inside the model is literally switched off, but the Role makes irrelevant parts of its training data much less likely to surface, reducing noise.
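Many chat-style model APIs (OpenAI's Chat Completions, for example) accept a list of messages in which a "system" message carries standing instructions such as the Role. This sketch only builds that list and does not call any API; the helper `with_role` is hypothetical, and the exact message schema your provider expects may differ.

```python
def with_role(role: str, user_prompt: str) -> list[dict[str, str]]:
    """Build a chat-style message list with the Role placed in the system message."""
    return [
        {"role": "system", "content": f"You are {role}."},
        {"role": "user", "content": user_prompt},
    ]

messages = with_role(
    "a Senior Systems Architect",
    "Review this plan for migrating a file server to the cloud.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the Role in its own message means you can reuse it across many user prompts without retyping it.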

2. Context: Grounding the Sandbox

Context is the "sandbox" the model operates in. It defines the background, the current state of the project, and the specific variables involved. Without Semantic Context, the model falls back on generic averages. If you are an engineer working on a Context Engineering project, the prompt should explicitly state the project's goals, the target audience, and any pre-existing technical documentation.

3. Task: The Objective Function

The Task must be singular and unambiguous. Instead of "Write some code," use a Task Definition like: "Generate a React functional component that handles a dynamic sidebar state." This narrows the model's objective and prevents it from wasting Token Budget on unnecessary fluff.

4. Constraints: Establishing Guardrails

Constraints establish the boundaries of the output. Positive constraints ("Do X") are generally more effective than negative constraints ("Don't do Y"). For example, "Include exactly three code examples" is a stronger signal than "Don't be too long," because it names a concrete target that both the model and you can check. By specifying constraints, you reduce the chance that the machine drifts into irrelevant Latent Regions.
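Because "Include exactly three code examples" is measurable, you can verify it automatically once the response comes back; "Don't be too long" offers nothing to test. This sketch counts fenced Markdown code blocks in a response, assuming the fences are well formed and not nested. The function names are hypothetical.

```python
FENCE = "`" * 3  # a Markdown code fence: three backticks

def count_code_blocks(response: str) -> int:
    """Count fenced code blocks: each opening and closing fence line pair is one block."""
    fence_lines = [
        line for line in response.splitlines() if line.strip().startswith(FENCE)
    ]
    return len(fence_lines) // 2

def meets_exactly(response: str, expected: int) -> bool:
    """Check the positive constraint 'include exactly N code examples'."""
    return count_code_blocks(response) == expected

sample = "\n".join([
    "Example 1:", FENCE + "python", "print(1)", FENCE,
    "Example 2:", FENCE + "python", "print(2)", FENCE,
])
print(count_code_blocks(sample))  # 2
print(meets_exactly(sample, 3))   # False
```

A failed check tells you to re-prompt with the constraint restated, rather than trusting the output as-is.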

5. Output Format: Defining the Structure

The Output Format ensures the result is usable by humans or other machines. Whether you need Markdown Tables, JSON Data Structures, or a technical summary in 3 bullet points, defining the structure at the beginning of the prompt is a core part of Context Density management.
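The five components can be assembled mechanically. This is a minimal sketch, assuming each component is plain text and each section is introduced by a labeled ### delimiter header; the `MegaPrompt` class and the sample values are hypothetical. The sections follow the numbered order above; since the text recommends stating the output format early, you may prefer to move that entry to the top of `sections`.

```python
from dataclasses import dataclass, field

@dataclass
class MegaPrompt:
    role: str
    context: str
    task: str
    constraints: list[str] = field(default_factory=list)
    output_format: str = ""

    def render(self, delimiter: str = "###") -> str:
        """Join the five components into one prompt under labeled delimiter headers."""
        sections = [
            ("ROLE", self.role),
            ("CONTEXT", self.context),
            ("TASK", self.task),
            ("CONSTRAINTS", "\n".join(f"- {c}" for c in self.constraints)),
            ("OUTPUT FORMAT", self.output_format),
        ]
        # Skip empty sections so the model never sees a header with nothing under it.
        return "\n\n".join(
            f"{delimiter} {name}\n{body}" for name, body in sections if body
        )

prompt = MegaPrompt(
    role="You are a Senior Systems Architect.",
    context="We maintain a React dashboard used by an internal support team.",
    task="Generate a React functional component that handles a dynamic sidebar state.",
    constraints=["Use React hooks.", "Include exactly three code examples."],
    output_format="Markdown, with a short explanation before each code example.",
).render()
print(prompt)
```

Treating the prompt as a structured object rather than a free-form string makes it easy to reuse the same Role and Context across many Tasks.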

Grounding with Examples: Zero-Shot vs. Few-Shot

Sometimes you want the model to act on its own knowledge. Zero-Shot prompting means asking the model to perform a task without providing any examples. This is effective for widely documented tasks like "Explain the Second Law of Thermodynamics."

However, for specialized tasks like Automated Incident Reporting or formatting system log analysis, you use Few-Shot prompting. This involves providing a few examples of the desired output within the prompt. By showing the model the exact pattern of a "Success" state, you anchor its Self-Attention mechanism to that specific format, significantly increasing the reliability of the Inference Out.
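A Few-Shot prompt for log analysis might be assembled like this. It is a sketch under stated assumptions: the log lines, the `SEVERITY | COMPONENT | ACTION` summary format, and the helper `build_few_shot_prompt` are all invented for illustration. The prompt ends with an open `Output:` slot so the model's most natural continuation is another line in the demonstrated pattern.

```python
def build_few_shot_prompt(
    instruction: str, examples: list[tuple[str, str]], new_input: str
) -> str:
    """Show input/output pairs first, then leave the final Output slot open."""
    parts = [instruction, ""]
    for inp, out in examples:
        parts += [f"Input: {inp}", f"Output: {out}", ""]
    parts += [f"Input: {new_input}", "Output:"]
    return "\n".join(parts)

examples = [
    ("disk /dev/sda1 at 97% capacity",
     "SEVERITY=HIGH | COMPONENT=storage | ACTION=free space"),
    ("cpu temp 55C nominal",
     "SEVERITY=LOW | COMPONENT=cpu | ACTION=none"),
]
prompt = build_few_shot_prompt(
    "Convert each system log line into a one-line incident summary.",
    examples,
    "fan 2 not detected on boot",
)
print(prompt)
```

With zero examples the same function produces a Zero-Shot prompt, which makes it easy to compare the two approaches on the same input.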

The Logic Chain: Chain of Thought (CoT)

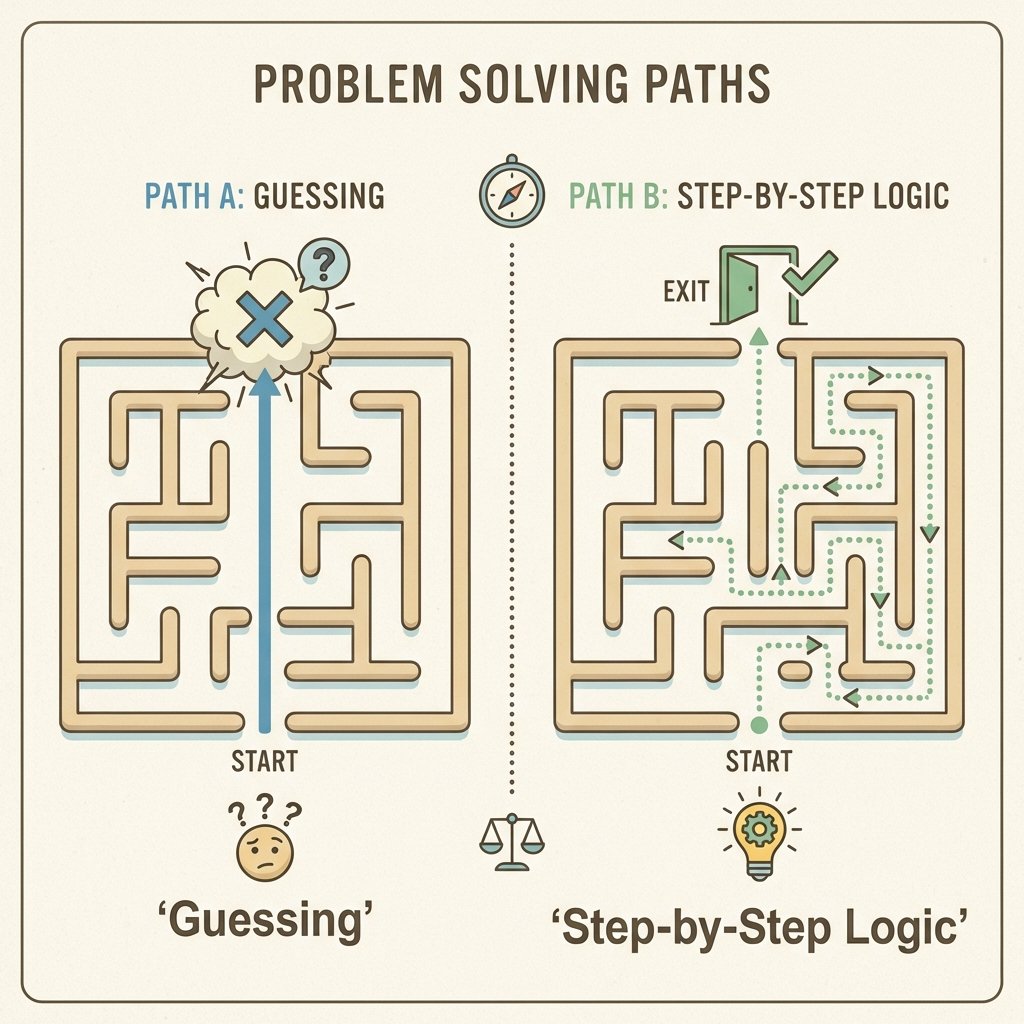

For tasks requiring deep reasoning, such as math, coding, or complex strategy, you shouldn't just ask for the answer. You should use Sequential Reasoning, or Chain of Thought (CoT) prompting: asking the model to explain its reasoning step by step. Having the model articulate the intermediate logic tends to reduce errors on multi-step problems and makes hallucinated steps much easier to spot, although it does not eliminate them.

It is like "showing your work" in a complex engineering project. If the model makes a mistake in Step 2, you can see it and correct it. This is particularly useful when you are performing Context Engineering, where you are managing the density of information moving through the Model Context Window.

Context Engineering: Mastering the "In"

We've talked about the "In" portion of the cycle as a prompt, but it's more than that—it's Advanced Context Engineering. This is the strategic management of the information you provide to the model to maximize its reasoning capability. If GIGO is the law, Context Engineering is the defense. You aren't just giving the model text; you are engineering its active memory via the Context Window.

This involves knowing when to inject data from Hugging Face Datasets, when to use System-Level Prompts, and how to verify that the model is actually working from the variables you supplied rather than from generic defaults. My gift with technology, first sparked by the dump's electronic recycling bins in 2000, is simply the ability to see these data patterns before the code is even written.
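Engineering the model's active memory usually means deciding what fits in a finite Context Window. This sketch keeps context chunks in priority order until a token budget is spent. It assumes a rough rule of thumb of about 4 characters per token for English text; exact counts require the target model's own tokenizer. The helpers `estimate_tokens` and `pack_context` are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate of ~4 characters per token (a heuristic, not a real tokenizer)."""
    return max(1, len(text) // 4)

def pack_context(chunks: list[str], budget_tokens: int) -> tuple[list[str], int]:
    """Walk chunks in priority order, keeping each one that still fits the budget."""
    kept, used = [], 0
    for chunk in chunks:
        cost = estimate_tokens(chunk)
        if used + cost > budget_tokens:
            continue  # too big for what's left; a smaller later chunk may still fit
        kept.append(chunk)
        used += cost
    return kept, used

# Stand-ins for documents of roughly 100, 200, and 50 tokens, highest priority first.
chunks = ["A" * 400, "B" * 800, "C" * 200]
kept, used = pack_context(chunks, budget_tokens=200)
print(len(kept), used)  # 2 150
```

Ordering chunks by priority before packing is the important design choice: whatever is dropped should be the context the model can most afford to lose.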

Summary: Stewardship of Intent

Mastering Prompt Structuring is about becoming a steward of your own intent. It is about realizing that the machine is an extension of your clarity—or your confusion. If you put in Garbage, you will get back a reflection of that lack of discipline. If you put in dense, architecturally sound context, you unlock the true potential of the machine.

We don't "chat" with AI; we Orchestrate it. We use Delimiters to organize the data, Roles to focus the knowledge, and Chain of Thought to verify the logic. This is the AI Mastery Path. It is the way we take the unstructured data of the world and build something beautiful and functional.

Take these tools. Build your Mega-Prompts. Engineer your Context. And remember: the quality of your output starts with the integrity of your input.