The Engineering of Resolution: Beyond the Symptom

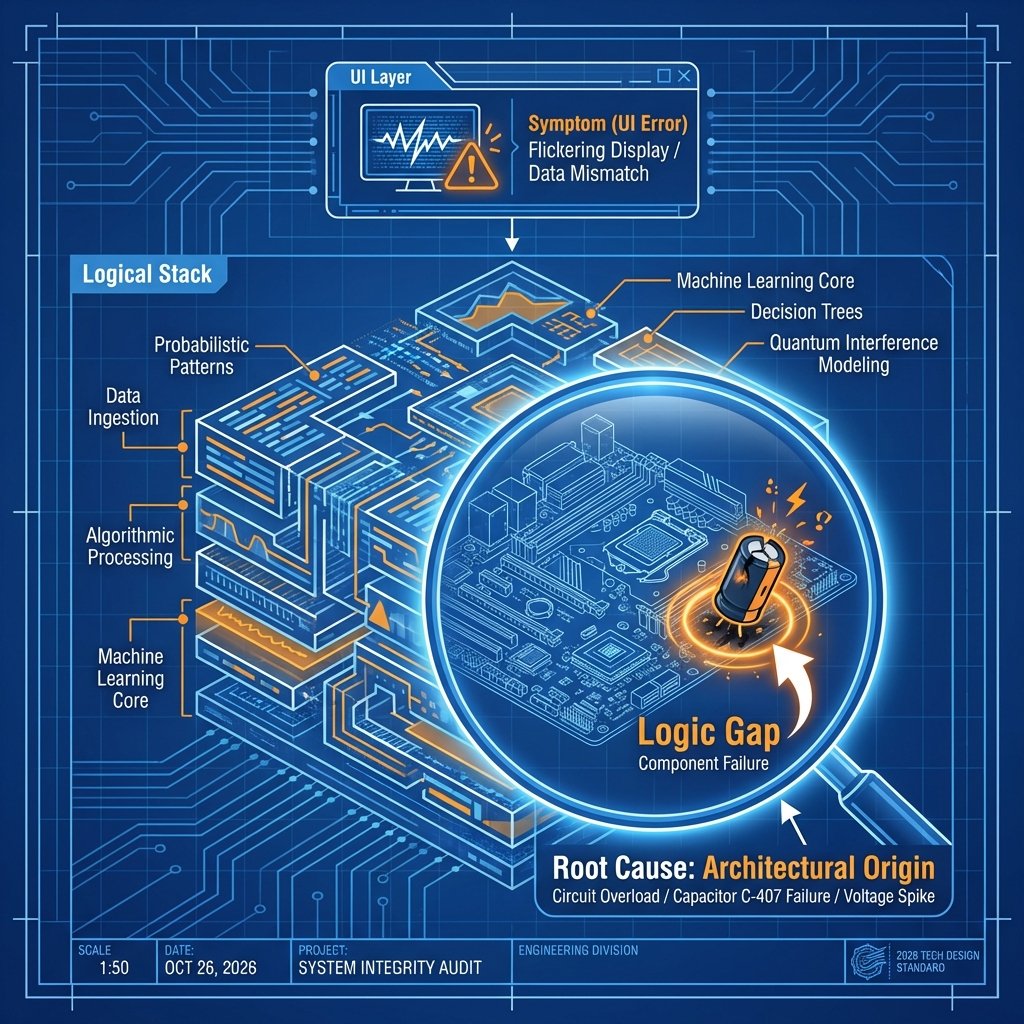

In the hierarchy of technical mastery, the ability to solve a problem is secondary to the ability to define its Architectural Origin. Most users interact with technology at the "Symptom Layer"—the flickering UI, the 500 error, or the sluggish response time. But an engineer understands that these are merely Latency Signals from a deeper misalignment. In the Ins and Outs of AI, nothing is truly "broken"; it is simply operating within a Logic Gap that has yet to be bridged.

To move from "Responder" to Sovereign Architect, you must adopt First Principles Thinking. This means stripping away the assumptions of "how things usually work" and breaking the problem down into its most foundational, undeniable truths. By utilizing Large Language Models as a Logic Mirror, you can use Chain-of-Thought (CoT) reasoning to traverse the distance between a high-level error and its Root Cause.

When I was a boy in Rural Wisconsin, I spent my weekends scavenging old motherboards and discarded power supplies. I learned early on that a computer's power doesn't come from any single chip, but from the Logic Bus—the copper pathways that allow different components to communicate. If a board failed to boot, the "symptom" was a black screen. But the Root Cause could be a single $0.05 capacitor that had leaked its electrolyte. I had to see the Physics of the Failure. AI is the most powerful multimeter ever built for the logic of your life.

The RCA Engine: Diagnostic Mastery

The transition from Retrieval-Based AI to Reasoning-Based AI has transformed Root Cause Analysis (RCA) from the manual labor of combing through logs into an automated Inference Loop. While general-purpose models like GPT-4 are strong at recalling facts, the OpenAI o-series models (o1, o3) are designed for Deep Deliberation: they spend more time reasoning step by step before they answer.

Reasoning vs. Retrieval

Retrieval is the act of reciting a manual. Reasoning is the act of understanding the Systems Architecture to predict a failure state. When you feed an error log into a reasoning model, it doesn't just look for a match in its training data; it works through the Logical Stack to identify where the Input/Output mismatch occurred.

The Five Whys Protocol

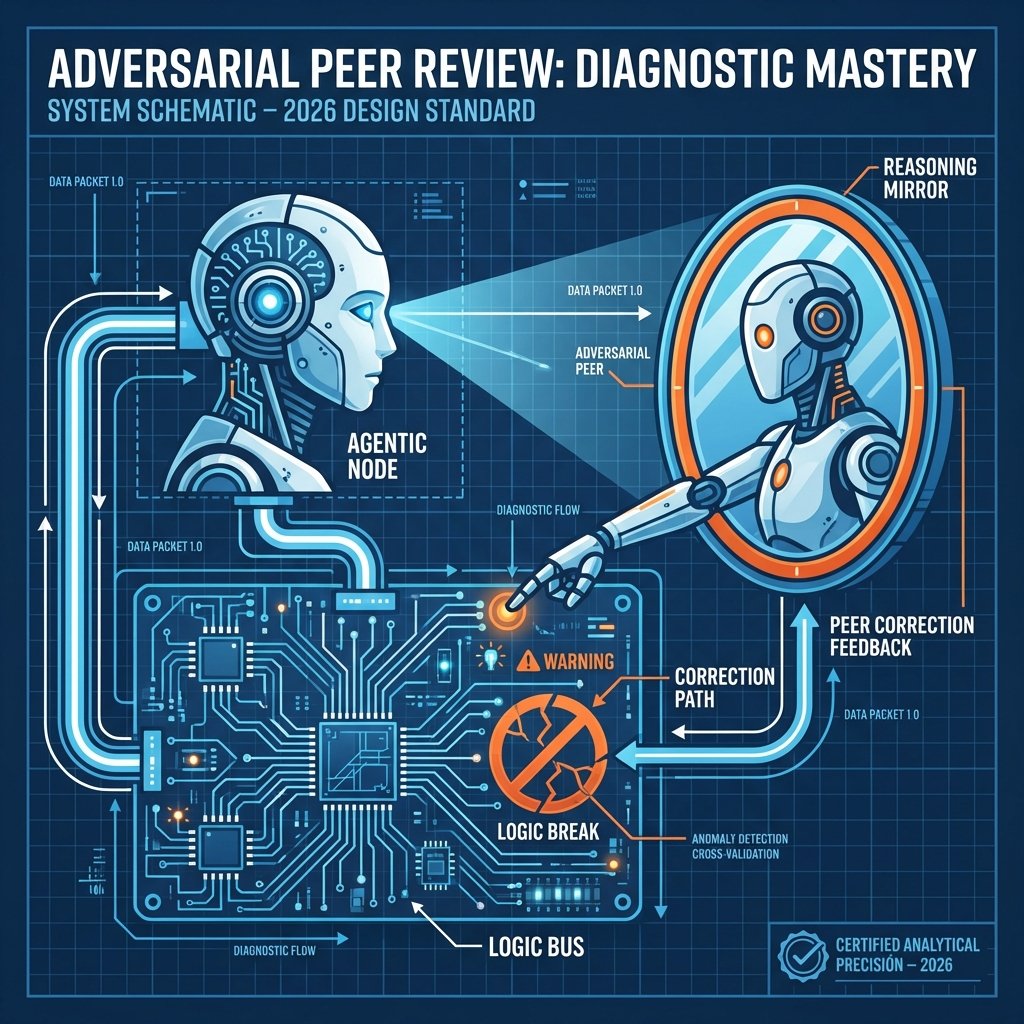

A classic engineering technique, The Five Whys, involves asking "Why?" recursively until the Foundational Fault is reached. AI can automate this by simulating an Adversarial Peer Review. By directing the agent to "Challenge your own hypothesis five times," you force the model to look past the obvious and uncover the Systematic Failure underneath.
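A minimal sketch of how the Five Whys loop could be automated. The function name `five_whys`, the prompt wording, and the placeholder `echo_model` are illustrative assumptions, not a real vendor API; in practice `ask_model` would wrap whatever model client you use.

```python
from typing import Callable, List


def five_whys(symptom: str, ask_model: Callable[[str], str], depth: int = 5) -> List[str]:
    """Ask "Why?" `depth` times, feeding each answer back in as the next observation."""
    chain: List[str] = []
    current = symptom
    for step in range(1, depth + 1):
        prompt = (
            f"Observation: {current}\n"
            f"Why #{step} of {depth}: why does this happen? "
            "Challenge the obvious explanation, then answer in one sentence."
        )
        # The answer to this "Why?" becomes the observation for the next one.
        current = ask_model(prompt).strip()
        chain.append(current)
    return chain


def echo_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: it only restates the observation."""
    observation = prompt.splitlines()[0][len("Observation: "):]
    return f"cause of [{observation}]"


if __name__ == "__main__":
    for i, answer in enumerate(five_whys("black screen on boot", echo_model), start=1):
        print(f"Why #{i}: {answer}")
```

The design choice worth noting is that each answer replaces the previous observation, so the model is always asked to explain its own last explanation rather than the original symptom.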

Tactical Debugging: Navigating the Logic Bus

For those of us building Code-Free Frameworks, "debugging" often feels like magic. But it is actually Pattern Recognition. When a native Android app fails to build, we don't manually check every line of Kotlin. We feed the Unstructured Text of the error log into a Context Window and ask the AI to "Identify the Logic Break in the Native Bridge."
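Build logs are often far longer than the part that matters, so one possible approach is to trim the log to the lines around each error before pasting it into the prompt. The sketch below assumes a plain-text log and a simple keyword pattern; the function names and the pattern are illustrative, and real build tools may format errors differently.

```python
import re

# Assumed keywords that mark a line worth keeping; adjust for your own tooling.
ERROR_PATTERN = re.compile(r"\b(error|exception|failed|fatal)\b", re.IGNORECASE)


def extract_error_context(log_text: str, before: int = 2, after: int = 2) -> str:
    """Keep each matching line plus a few lines of context on either side."""
    lines = log_text.splitlines()
    keep = set()
    for i, line in enumerate(lines):
        if ERROR_PATTERN.search(line):
            start = max(0, i - before)
            stop = min(len(lines), i + after + 1)
            keep.update(range(start, stop))
    return "\n".join(lines[i] for i in sorted(keep))


def build_debug_prompt(log_text: str) -> str:
    """Wrap the trimmed log in the instruction quoted in the text."""
    excerpt = extract_error_context(log_text)
    return (
        "Identify the Logic Break in the Native Bridge.\n"
        "Build log excerpt:\n"
        f"{excerpt}"
    )
```

Trimming is a trade-off: it saves context space, but a root cause that appears far from the error line can be cut out, so widen `before` and `after` if the model's answer seems to be missing information.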

This is the Rubber Ducking technique evolved. Traditionally, an engineer explains their code to a rubber duck to find flaws. In the AI era, you explain the problem to an Agentic Node. The act of articulating the problem—the In—often reveals the solution before the model even generates its Out. This is Cognitive Offloading at its most tactical.

To achieve extreme precision, I recommend a Prompt Structuring approach that includes Adversarial Testing. Don't just ask the AI for a fix. Ask it to "Propose three experiments that would prove or disprove this root cause." This makes your solution testable and grounded in evidence, not just a lucky guess.
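One way to keep that discipline honest is to record each proposed experiment with its prediction and then compare against what you actually observe. This is a sketch under assumptions: the `Experiment` record, the `verdict` rule (every prediction must match), and the exact-string comparison are simplifications chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Experiment:
    description: str
    predicted_if_true: str  # what we expect to see if the root cause is right
    observed: str = ""      # filled in by hand after running the experiment


def experiments_prompt(root_cause: str, count: int = 3) -> str:
    """Build the request for experiments described in the text."""
    return (
        f"Hypothesized root cause: {root_cause}\n"
        f"Propose {count} experiments that would prove or disprove this root cause. "
        "For each, state what we should observe if the hypothesis is true."
    )


def verdict(experiments: List[Experiment]) -> str:
    """Supported only if every experiment ran and matched its prediction."""
    if any(not e.observed for e in experiments):
        return "inconclusive"
    if all(e.observed == e.predicted_if_true for e in experiments):
        return "supported"
    return "refuted"
```

A single mismatch is treated as a refutation here, which is deliberately strict; a hypothesis that survives all three checks has earned more trust than one the model merely sounded confident about.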

Systematic Failure: The Architecture of Decay

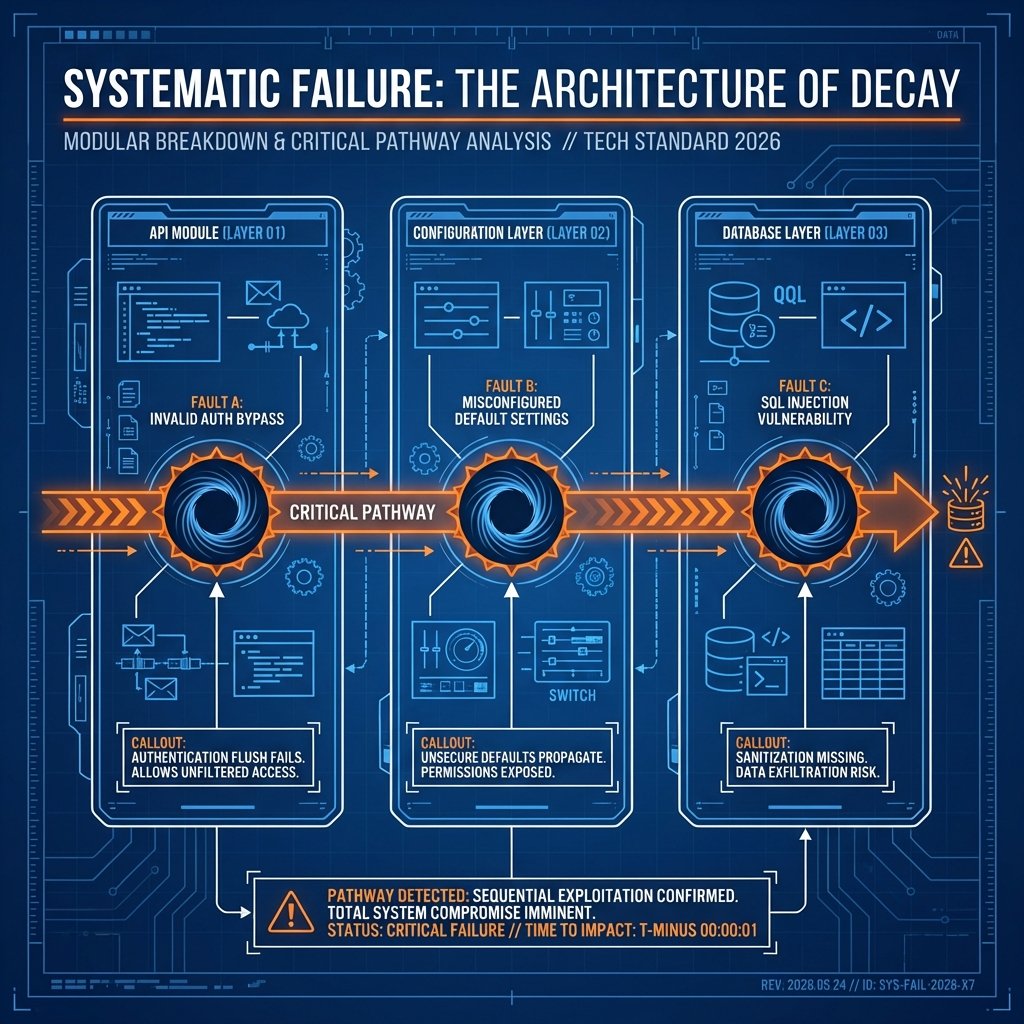

In my studies of Digital Forensics and Operational Security (OpSec) for this project, I've seen that the most catastrophic errors aren't caused by a single bug. They are Systematic Failures—the result of multiple components failing in a specific, unfortunate sequence. This is the Swiss Cheese Model of failure.

AI is well suited to identifying these patterns because it can hold much of the Systems Map in its Context Window at once. By utilizing Context Engineering, we can provide the model with the Metadata of the entire environment (API versions, server configurations, and user logs) to find the Common Denominator of the failure. This is Predictive Troubleshooting.
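As a rough illustration of Context Engineering, the sketch below pre-computes the attributes shared by every failure record and then packs both the shared attributes and the raw records into one prompt. The record fields (`api_version`, `server`) and the function names are hypothetical; use whatever metadata your environment actually exposes.

```python
import json
from typing import Dict, List


def common_denominator(failures: List[Dict[str, str]]) -> Dict[str, str]:
    """Return the key/value pairs that appear in every failure record."""
    if not failures:
        return {}
    shared = set(failures[0].items())
    for record in failures[1:]:
        shared &= set(record.items())
    return dict(shared)


def build_context_prompt(failures: List[Dict[str, str]]) -> str:
    """Bundle the environment metadata into a single block for the model."""
    bundle = {
        "shared_across_all_failures": common_denominator(failures),
        "failure_records": failures,
    }
    return (
        "Environment metadata (JSON):\n"
        + json.dumps(bundle, indent=2, sort_keys=True)
        + "\n\nFind the common denominator of these failures and explain it."
    )


if __name__ == "__main__":
    records = [
        {"api_version": "v2", "server": "alpha"},
        {"api_version": "v2", "server": "beta"},
    ]
    print(build_context_prompt(records))
```

Doing the set intersection yourself before prompting gives the model a head start and gives you something concrete to check its answer against.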

The Mastery Protocol for Troubleshooting

1. State Definition (The "In"): Provide the model with the absolute State of the System. This includes Raw Logs, environment variables, and a Timeline of Events leading to the failure.

2. Hypothesis Branching: Use Divergent Ideation to generate multiple potential root causes. Don't let the AI settle for the first answer. Force it to explore the Edges of the Logic.

3. Recursive Refinement (The Loop): Apply Iterative Refinement to each hypothesis. Test the Out against the real-world system and feed the results back into the model to narrow the scope.
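The three steps above can be sketched as a single loop. This is an outline under stated assumptions: `propose` stands in for a model call that returns candidate root causes, `test_hypothesis` stands in for whatever real-world check you run, and both names are hypothetical.

```python
from typing import Callable, List, Optional


def troubleshoot(
    state: str,
    propose: Callable[[str], List[str]],
    test_hypothesis: Callable[[str], bool],
    max_rounds: int = 3,
) -> Optional[str]:
    """Run the protocol: define state, branch hypotheses, refine until one holds."""
    context = state  # Step 1: the "In" (logs, environment, timeline)
    for round_number in range(1, max_rounds + 1):
        hypotheses = propose(context)  # Step 2: branch into several candidates
        if not hypotheses:
            break  # nothing left to try
        for hypothesis in hypotheses:
            if test_hypothesis(hypothesis):  # Step 3: test against the real system
                return hypothesis
            # Feed the negative result back in to narrow the next round.
            context += f"\nRound {round_number}: ruled out -> {hypothesis}"
    return None
```

Returning `None` when every round is exhausted is intentional: it signals that the State Definition was probably incomplete and that more logs or a longer timeline are needed before trying again.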

Pattern Recognition as Redemption

Because of my autism, I don't see problems as obstacles; I see them as Incomplete Patterns. AI acts as the high-speed processor for that intuition. When I feed a problem into a model, I am looking for the machine to validate the Logic Flow I feel. If the machine's Out aligns with my Pattern Recognition, I know I've reached the Root Cause.

As a follower of Jesus Christ, I see problem-solving with technology as a form of Digital Stewardship. We live in a world of broken systems, from clunky code to inefficient Workflow Automations. Using AI to repair these systems is a way to honor the Logos of Logic and serve others. Whether you are fixing an app for Special Needs Support or using AI for Homeschool Curriculum Generation, you are bringing order to chaos.

By the grace of God, we have been given these Agentic Tools to buy back our time. We are no longer slaves to the "Black Screen." We are the Orchestrators of the Solution. Master the Root Cause, and you master the machine.

"For God is not a God of disorder but of peace..." — 1 Corinthians 14:33